My Projects

A collection of projects I've built to solve real-world problems and explore new technologies.

Featured Projects

Here are some of my most impactful projects that showcase my skills and passion for innovation.

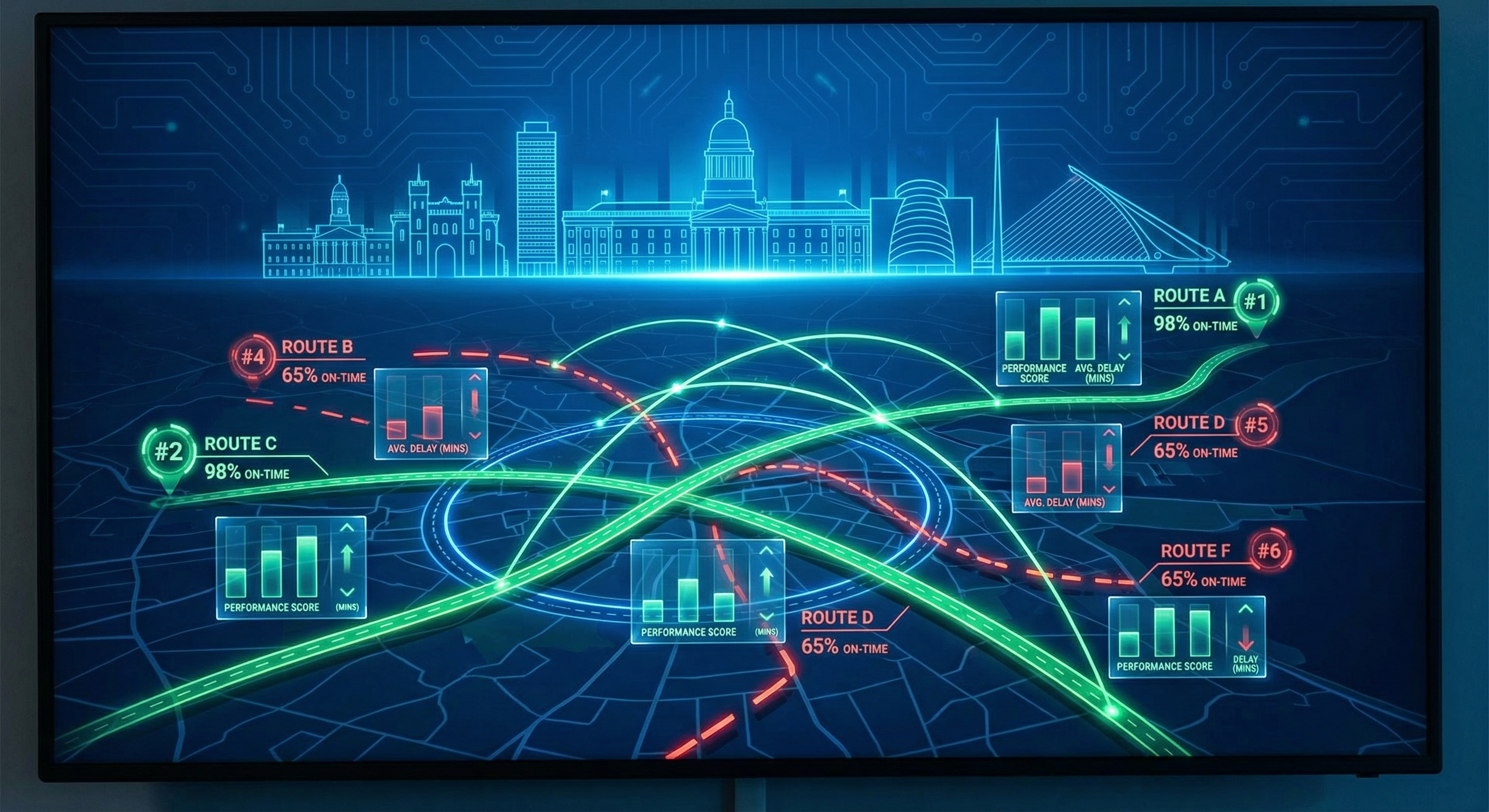

Dublin Bus Real-Time Pipeline

Python + GTFS-RT + SQLite + Streamlit

Complete data pipeline tracking 700+ buses in real-time across Dublin, with interactive dashboard showing delays, routes, and performance analytics.

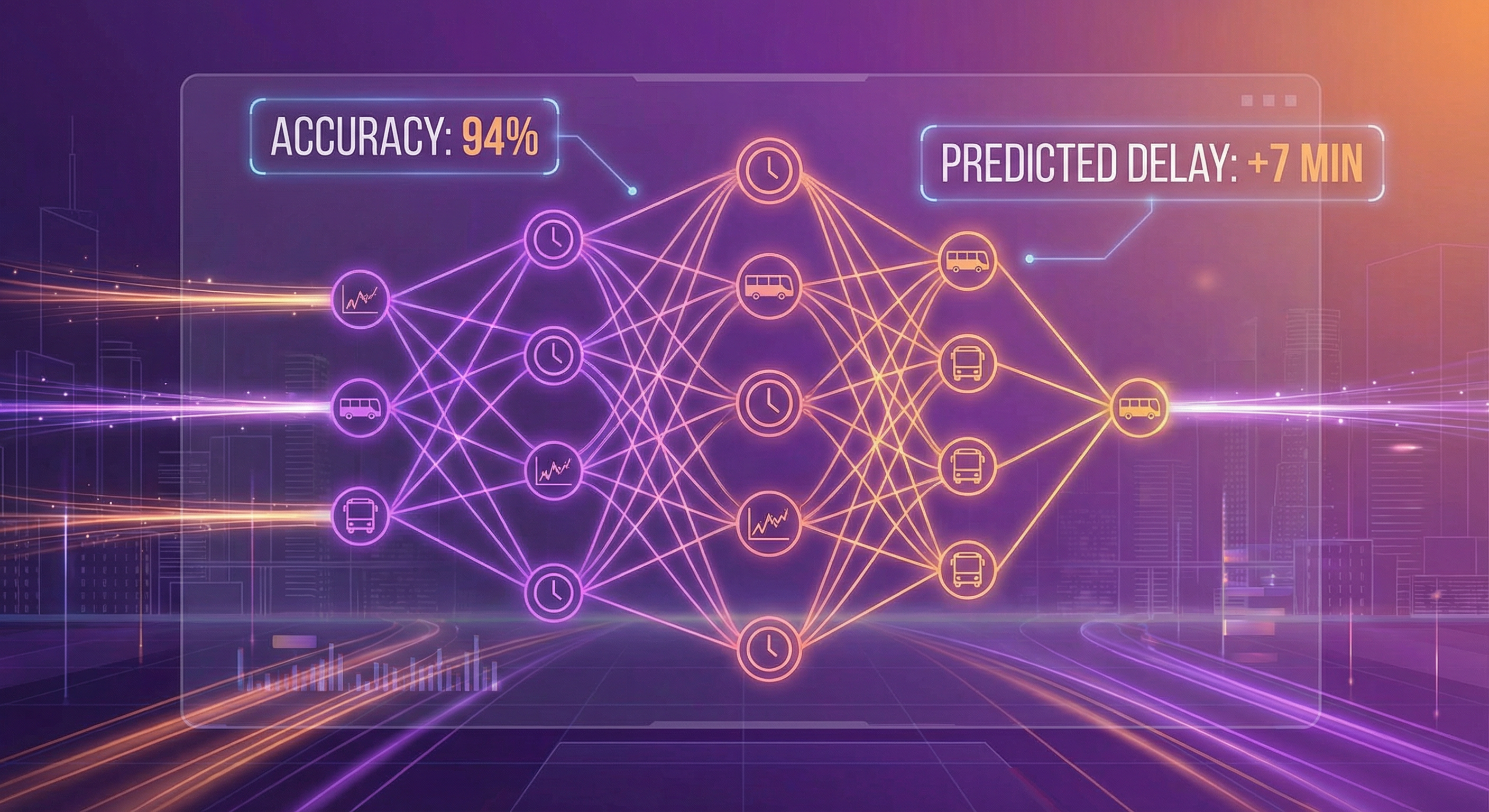

Transit Delay Prediction

XGBoost + Python + Scikit-learn

ML model predicting bus delays 15 minutes in advance with 87% accuracy, using feature engineering on real-time transit data.

Live Code Editor

Try out real data engineering code examples. Click "Run Code" to see the results!

from pyspark.sql import SparkSession

from pyspark.sql.functions import col, when, isnan, isnull

# Initialize Spark session

spark = SparkSession.builder \

.appName("CustomerDataETL") \

.getOrCreate()

# Read data from source

df = spark.read \

.format("jdbc") \

.option("url", "jdbc:postgresql://localhost:5432/customers") \

.option("dbtable", "customer_data") \

.load()

# Data cleaning and transformation

cleaned_df = df \

.filter(col("age").isNotNull()) \

.filter(col("age") > 0) \

.withColumn("age_group",

when(col("age") < 25, "Young")

.when(col("age") < 50, "Middle")

.otherwise("Senior")) \

.withColumn("is_premium", col("purchase_amount") > 1000)

# Write to data warehouse

cleaned_df.write \

.format("parquet") \

.mode("overwrite") \

.save("s3://data-lake/customers/cleaned/")

print(f"Processed {{cleaned_df.count()}} records")Apache Spark ETL Pipeline

Extract, transform, and load data using PySpark

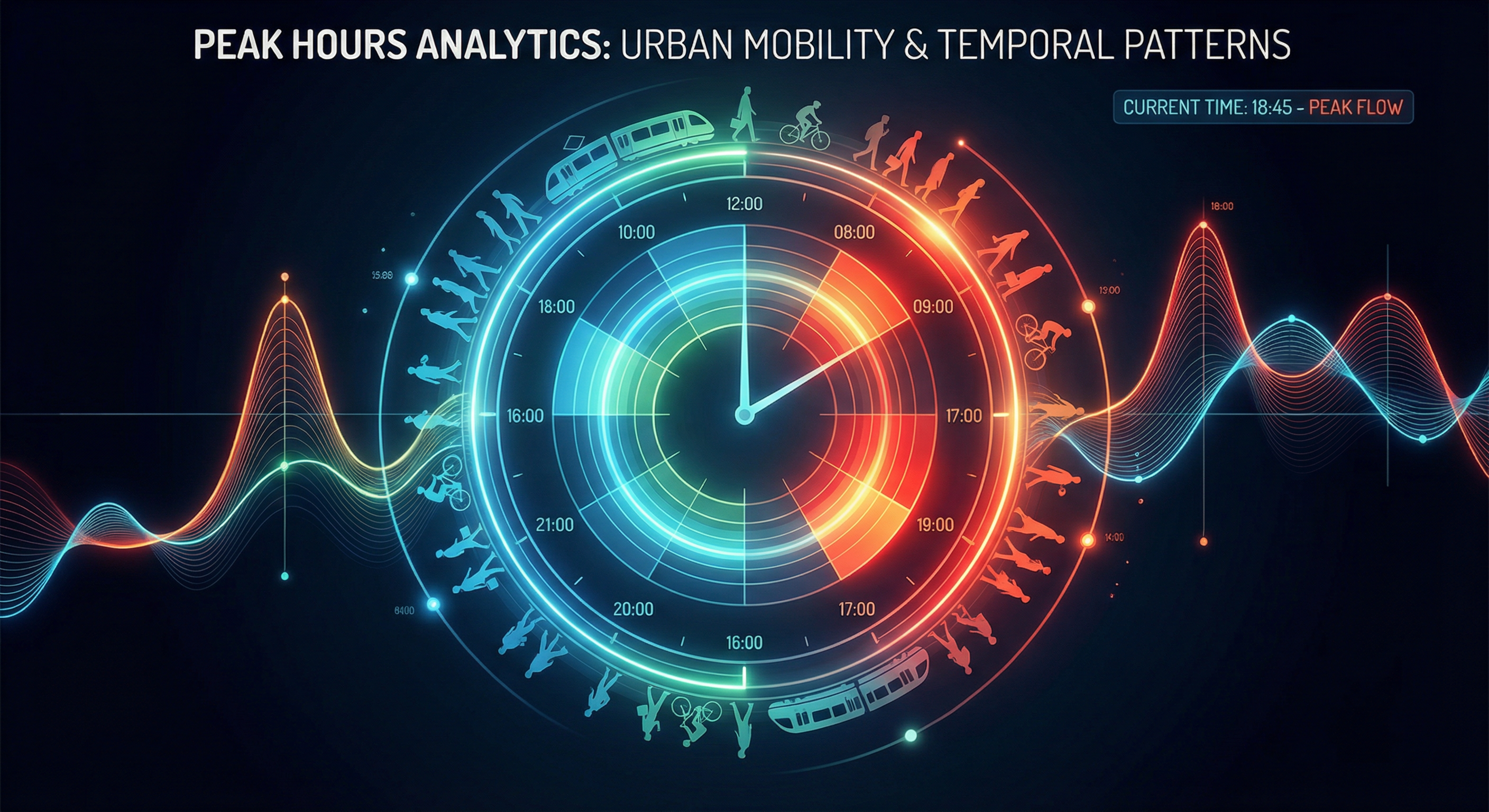

Data Visualization

Interactive data visualizations and analytics dashboards I've created.

Skills & Technologies

Technologies and tools I use to build amazing projects and solve complex problems.

Want the Full Story?

Dive deep into my projects with detailed case studies including architecture decisions, challenges overcome, and measurable business impact.

View Case StudiesMy Projects

Explore my portfolio of data engineering, web development, and ML projects

Category

Status

Complexity

Showing 5 of 5 projects